This article will help you understand what goroutines are in Go and how the Go scheduler works to get the best performance out of concurrent programs. I will try my best to explain everything in simple language.

We will cover what threads and processes are in an OS, what concurrency is and why it is hard to achieve, and how goroutines help us achieve it. Then we will take a deep dive into the internals of Go, i.e., the Go scheduler, which manages the scheduling of multiple goroutines so that each goroutine gets a fair chance to run and none of them blocks the others.

Why should you care? As developers, we should know how the language schedules and executes goroutines if we are going to work with them.

Please note that this article is not a tutorial on writing goroutines; the focus is on how they are scheduled.

Concurrency

Let's take an example to understand concurrency. Suppose multiple processes are ready to run at the same time. How do we run them so that each process gets a fair chance to execute and none of them blocks the others that are waiting for it to finish?

It's the job of the OS (operating system) to handle this. The OS allocates the CPU to each of these processes, one at a time, for a time slice. Once that time slice is over, or if the process gets blocked on an I/O operation, a context switch happens and another process is scheduled to run. In this way, multiple processes share the CPU and execute in an interleaved fashion to make progress.

So, concurrency is about handling multiple things over the same period of time by interleaving their execution, in no fixed order.

Parallelism

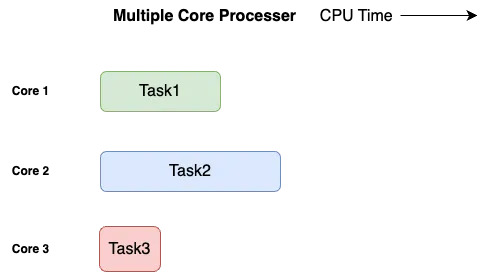

A chip has multiple CPU cores; in the example above, we were using only one core while the others sat idle. That is a waste of resources that we could use to compute faster. Instead, we send each process to a different CPU core.

So, parallelism is the ability to execute multiple computations simultaneously.

Why Golang for concurrency?

Go provides built-in support for concurrency, while many other platforms rely on an external library. Node.js, for example, uses libuv, an external C library. In Go, a higher-level abstraction is built into the language, which makes writing concurrent code clean and elegant.

Why do we need to build a concurrency primitive in Go?

Let's go back to the basics of the OS. The idea of concurrency starts with threads and processes. The job of the operating system is to give each process a fair chance to run, although there are times when higher-priority processes go first.

What is a Process?

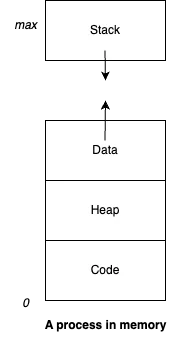

A process is an instance of a running program. It is allocated its own memory space, which has several regions. The code region stores the compiled code. The data region stores global and static variables. The heap region is used for dynamic memory allocation. The stack region is used for local variables that are in scope for the current execution. When processes are swapped out of memory and later restored, additional information must also be stored and restored. Key among them are the program counter and the values of all program registers.

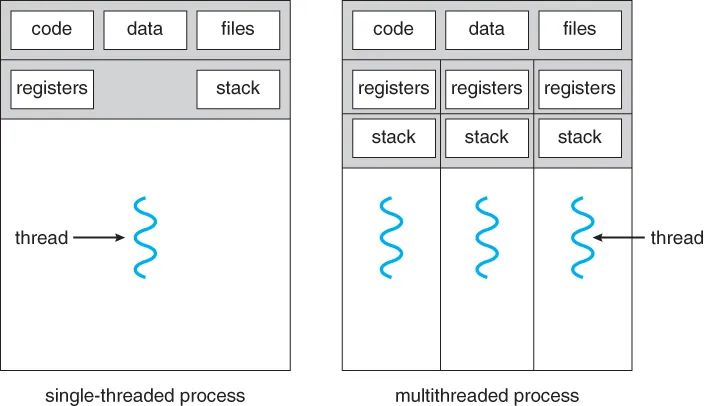

What is a Thread?

Threads are the smallest unit of CPU execution. A thread consists of a program counter, a stack, and a set of registers. A process can have multiple threads. A single-threaded process uses only one thread, which is how things used to work. Today, multi-threaded applications use multiple threads within a single process. All threads of a process share its open files and its code, data, and heap regions.

For example, before threads, a web server had to handle one request at a time. With threads, whenever a request arrives, the server spins up a new thread to handle it and goes back to listening for more requests. This means we can handle multiple requests at the same time, so the web page loads faster for the user requesting it.

Two Types of Threads

There are two types of threads.

- User Thread: These are threads created and managed in user space by the application or its runtime. They live above kernel threads and are mapped onto them to run.

- Kernel Thread: These are threads created and scheduled by the OS kernel to perform simultaneous tasks.

Limitations of the Threads

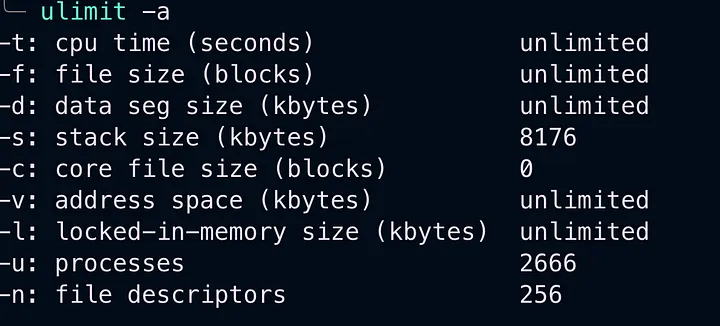

In the picture above, each thread has its own stack. The issue is that each thread comes with a fixed stack size; on my machine it is 8MB. So if I have 8GB of memory, I can create about 1000 threads. This fixed stack size limits the number of threads we can create.

Also, if we scale the number of threads too high, we run into the C10K problem, the challenge of serving ten thousand concurrent connections: as we increase the number of threads, the application becomes unresponsive because the scheduler needs longer and longer to cycle through all of them.

Memory Access Synchronization — Meet Deadlock

Concurrency is hard. As we discussed above, all threads of a process share the same address space, i.e., memory. They share the data and heap regions of the process, so threads communicate with each other by sharing memory. This sharing of memory creates complexity. To deal with it, the OS provides a locking system: when a thread wants to access shared memory, it places a lock on it and releases the lock once it has finished reading or updating the memory. With locking, we synchronize the execution of threads that need the same shared memory. This locking system can lead to a problem common in operating systems, known as deadlock, where threads wait forever for locks held by each other.
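To make the lock-then-unlock idea concrete, here is a minimal sketch in Go using `sync.Mutex` from the standard library. Goroutines (introduced in the next section) stand in for threads here, and the `counter` type and `incrementConcurrently` function are names I made up for this illustration.

```go
package main

import (
	"fmt"
	"sync"
)

// counter is a hypothetical type: a shared integer guarded by a mutex.
type counter struct {
	mu sync.Mutex
	n  int
}

// inc places a lock on the shared memory, updates it, then unlocks it.
func (c *counter) inc() {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.n++
}

// incrementConcurrently starts `workers` goroutines that each call inc
// `perWorker` times, waits for all of them, and returns the final count.
func incrementConcurrently(workers, perWorker int) int {
	var c counter
	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := 0; j < perWorker; j++ {
				c.inc()
			}
		}()
	}
	wg.Wait() // every increment has finished once Wait returns
	return c.n
}

func main() {
	fmt.Println(incrementConcurrently(8, 1000)) // 8000
}
```

Without the lock, the increments from different workers could overwrite each other and the final count could come out lower than expected.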

Goroutines

Goroutines are lightweight threads that are managed by the Go runtime rather than by the operating system kernel. They are user-level threads. The Go runtime can handle thousands, even millions, of goroutines in a single process, which is mind-blowing.
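As a rough illustration of how cheap goroutines are, the sketch below starts a large number of them and waits for all of them to finish. The function name `runMany` and the figure of 100000 are my own choices for the example, not limits of the runtime.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// runMany starts n goroutines, each of which atomically adds 1 to a
// shared counter, and returns the counter once all of them are done.
func runMany(n int) int64 {
	var done int64
	var wg sync.WaitGroup
	wg.Add(n)
	for i := 0; i < n; i++ {
		go func() {
			defer wg.Done()
			atomic.AddInt64(&done, 1)
		}()
	}
	wg.Wait()
	return atomic.LoadInt64(&done)
}

func main() {
	fmt.Println(runMany(100000)) // 100000
}
```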

Goroutines start with a stack of only 2KB, which grows as needed, compared to the fixed 8MB stack of a kernel-level thread on my machine. Goroutines are much cheaper to create than traditional threads, making it easy to create many of them to perform concurrent tasks.

To get around the limitations of sharing memory that we discussed above, goroutines communicate data via channels.
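The difference in stack size is easier to appreciate with some quick arithmetic. The sketch below assumes an 8 MB stack per thread (the size for which the earlier figure of roughly 1000 threads in 8GB works out) and a 2 KB starting stack per goroutine; `stacksThatFit` is a helper invented for this example, and it ignores every other use of memory.

```go
package main

import "fmt"

// stacksThatFit returns how many stacks of stackBytes fit into memBytes.
// It is a back-of-the-envelope helper, not a measurement.
func stacksThatFit(memBytes, stackBytes uint64) uint64 {
	return memBytes / stackBytes
}

func main() {
	const (
		kib = 1 << 10
		mib = 1 << 20
		gib = 1 << 30
	)
	// Assumed 8 MB stack per OS thread.
	fmt.Println(stacksThatFit(8*gib, 8*mib)) // 1024
	// 2 KB starting stack per goroutine.
	fmt.Println(stacksThatFit(8*gib, 2*kib)) // 4194304
}
```

In practice goroutine stacks grow as they are used, so the second number is an upper bound on paper rather than something to rely on.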

Go is a programming language that was designed with concurrency in mind, and it includes a built-in implementation of CSP. In Go, channels are first-class citizens and are used to communicate between goroutines (lightweight threads) that execute concurrently.

Communicating Sequential Processes (CSP) is a concurrency model that allows concurrent processes to communicate with each other through channels. This model was introduced by Tony Hoare in his 1978 paper, “Communicating Sequential Processes.”
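Here is a small sketch of that idea in Go: one goroutine sends values on a channel and another part of the program receives them, so no memory is shared directly. The function names `produce` and `sum` are made up for this example.

```go
package main

import "fmt"

// produce starts a goroutine that sends the integers 1..n on a channel
// and closes the channel when it is done.
func produce(n int) <-chan int {
	ch := make(chan int)
	go func() {
		defer close(ch)
		for i := 1; i <= n; i++ {
			ch <- i
		}
	}()
	return ch
}

// sum receives values until the channel is closed and returns their total.
func sum(ch <-chan int) int {
	total := 0
	for v := range ch {
		total += v
	}
	return total
}

func main() {
	fmt.Println(sum(produce(10))) // 55
}
```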

Go also provides other synchronization primitives to handle race conditions, such as wait groups and mutexes in the sync package, but these are usually only necessary in more complex scenarios.

Hence, you can write concurrent programs that are safe and easy to reason about.

Go Scheduler

Goroutines are created by the user, and the Go scheduler manages their execution. The scheduler determines which goroutine should get a chance to run, and it does so by scheduling goroutines onto operating system threads. Runnable goroutines wait in queues and are given turns on a thread, so that each goroutine gets a fair share of CPU time.

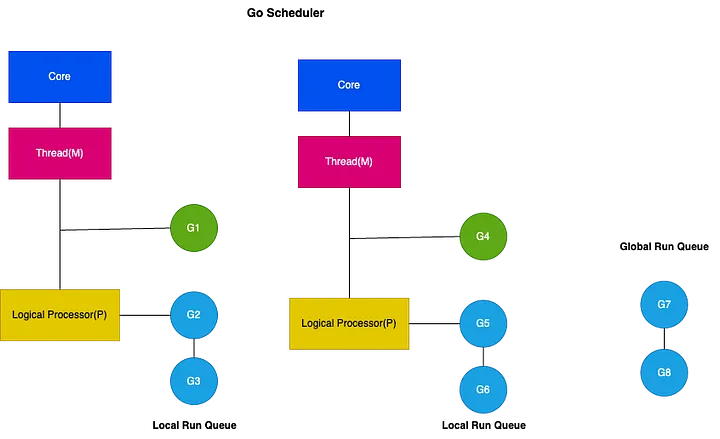

It is also known as the M:N scheduler. The Go runtime allows up to GOMAXPROCS OS threads to execute Go code at the same time. By default, GOMAXPROCS is set to the number of logical CPUs available. The Go scheduler distributes runnable goroutines over these worker OS threads. At any time, N goroutines can be scheduled on M OS threads.

Consider a goroutine that is taking a lot of CPU time. It can block other goroutines. To deal with this problem, the Go scheduler implements asynchronous preemption (since Go 1.14), which is triggered based on time. If a goroutine has been running for more than 10ms, Go will try to preempt it.
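You can inspect these values on your own machine with the standard `runtime` package. In this sketch, `schedulerInfo` is a helper name I made up; calling `runtime.GOMAXPROCS(0)` only reads the current setting without changing it.

```go
package main

import (
	"fmt"
	"runtime"
)

// schedulerInfo reports the number of logical CPUs, the current
// GOMAXPROCS setting, and the number of goroutines that currently exist.
func schedulerInfo() (cpus, maxProcs, goroutines int) {
	return runtime.NumCPU(), runtime.GOMAXPROCS(0), runtime.NumGoroutine()
}

func main() {
	cpus, maxProcs, goroutines := schedulerInfo()
	fmt.Println("logical CPUs:", cpus)
	fmt.Println("GOMAXPROCS:  ", maxProcs)
	fmt.Println("goroutines:  ", goroutines)
}
```

The output depends on the machine, so no expected values are shown here.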
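The sketch below tries to show preemption at work. It assumes Go 1.14 or later on a platform that supports asynchronous preemption; on older versions it would likely hang. It limits Go code to one thread at a time, starts a goroutine that spins in a tight loop, and checks that the calling goroutine still gets CPU time to stop it. The name `spinUntilStopped` is made up for this example.

```go
package main

import (
	"fmt"
	"runtime"
	"sync/atomic"
	"time"
)

// spinUntilStopped runs a busy-looping goroutine while only one thread
// may execute Go code, then stops it from the calling goroutine.
// It returns how long the whole exercise took.
func spinUntilStopped() time.Duration {
	prev := runtime.GOMAXPROCS(1) // one running goroutine at a time
	defer runtime.GOMAXPROCS(prev)

	start := time.Now()
	var stop int32
	done := make(chan struct{})
	go func() {
		defer close(done)
		// Tight loop with no blocking operations: the goroutine never
		// gives up the CPU voluntarily.
		for atomic.LoadInt32(&stop) == 0 {
		}
	}()

	// While we sleep, the spinner occupies the only slot. We can only
	// continue afterwards if the runtime preempts it.
	time.Sleep(50 * time.Millisecond)
	atomic.StoreInt32(&stop, 1)
	<-done
	return time.Since(start)
}

func main() {
	fmt.Println("finished after", spinUntilStopped())
}
```

If the program prints a duration and exits, the spinning goroutine did not starve the caller.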

Components of A Go Scheduler

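- Global Run Queue: It holds goroutines that are ready to be executed but are not assigned to any thread's local run queue. A goroutine created with the “go” keyword normally goes into the local run queue of the thread that created it, and overflows into the global run queue when that local queue is full.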

- Operating System Threads (M): They are the underlying resource on which goroutines execute. The number of threads that can run Go code at the same time is controlled by the GOMAXPROCS environment variable.

- Local Run Queue: Each worker thread has its own local run queue (strictly speaking, the queue belongs to the logical processor that the thread holds while it runs Go code). It holds the goroutines that are ready to be executed on this thread.

- Work Stealing: When a thread has no more goroutines to execute, it steals goroutines from other threads' local run queues. This helps keep the workload balanced among all threads.

- Blocking Operations: The scheduler has support for blocking operations, such as I/O and system calls. When a goroutine blocks in a system call, the thread running it blocks with it, and the scheduler hands the local run queue over to another thread so that the other goroutines keep executing.

- Garbage Collection: The Go scheduler works closely with the garbage collector, which manages memory deallocation. The scheduler pauses goroutines at safe points when the collector needs it to, and memory that a finished goroutine no longer references is reclaimed in a later garbage collection cycle.
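To picture the work stealing described in the list above, here is a toy model. It is not the runtime's implementation: the `worker` type, the `stealHalf` function, and the use of plain integer IDs in place of goroutines are all simplifications I made up for this sketch.

```go
package main

import "fmt"

// worker is a hypothetical stand-in for a scheduler thread with a local
// run queue; the ints are stand-ins for runnable goroutines.
type worker struct {
	queue []int
}

// stealHalf moves half of the victim's queued items (rounded up) to the
// thief and returns how many were moved.
func stealHalf(thief, victim *worker) int {
	n := (len(victim.queue) + 1) / 2
	thief.queue = append(thief.queue, victim.queue[:n]...)
	victim.queue = victim.queue[n:]
	return n
}

func main() {
	busy := &worker{queue: []int{1, 2, 3, 4, 5, 6}}
	idle := &worker{} // has run out of work

	stolen := stealHalf(idle, busy)
	fmt.Println(stolen, idle.queue, busy.queue) // 3 [1 2 3] [4 5 6]
}
```

After the steal, both workers have something to run, which is the balancing effect work stealing is meant to achieve.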

Together, these components ensure that each goroutine gets a fair chance at CPU time and that the workload of goroutines is balanced across operating system threads. This is what lets a developer write high-performance, efficient concurrent programs.